Simplifying AI Deployment for Quality Inspection

Q&A with Jonathan Hou, Chief Technology Officer | Pleora Technologies

Tell us about Pleora Technologies and your role with the company.

Pleora Technologies is headquartered in Ottawa, Canada, and we’ve been a global leader in video and sensor networking in the automation market for 20 years. We’re a founding member of the group behind the GigE Vision standard, which is used in the majority of machine vision cameras today. Our first products were interface solutions that enabled Ethernet and USB 3.0 connectivity for automated inspection systems and cameras. Over time our products have been adopted across a wider range of markets, including transportation, security and defense, and medical imaging. In the past year we’ve expanded our product focus and recently introduced an embedded solution that simplifies the deployment of artificial intelligence (AI) and machine learning for inspection systems.

I am Chief Technology Officer at Pleora and am responsible for leading our R&D team in developing and delivering innovative, reliable real-time networked sensor solutions for Industry 4.0.

What are some of the opportunities for machine learning and artificial intelligence in quality inspection?

When dealing with quality inspection, it always comes down to how much time you are spending on manual inspection versus automated inspection. The risks and issues with manual inspection can be summarized in the image below: how many black circles can you count?

The next step is to determine how AI fits into automated quality inspection. It’s important to step back and define machine learning and artificial intelligence. AI is the ability of a machine to perform cognitive functions that we associate with the human mind, such as recognizing and learning. Machine learning, a subset of AI, involves coding a computer to process structured data and make decisions without constant human supervision. Once programmed with machine learning capabilities, a system can choose between categories of answers (classification) and predict continuous values (regression).

Machine learning programs become progressively better as they access more data, but still require human oversight to correct their mistakes. Capturing data to train models for inspection applications is an important step towards getting the best results. You can then take the technology one step further with deep learning, with algorithms that use a wider range of structured and unstructured data to make independent decisions while learning from mistakes and adapting, all without requiring human programming.
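To make the classification idea concrete, here is a minimal sketch of one of the simplest machine learning models, a nearest-centroid classifier, which learns from labeled examples and then labels new parts. It assumes each inspection image has already been reduced to a short feature vector; the features, values, and labels are hypothetical, and real inspection models (including deep learning) are far richer.

```python
from math import dist
from statistics import fmean


def train_centroids(samples):
    """Learn one mean feature vector (centroid) per label.

    samples: list of (feature_vector, label) pairs.
    """
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    # Average each feature column separately for every label.
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in by_label.items()
    }


def classify(centroids, features):
    """Predict the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical features per part: (mean brightness, fraction of dark pixels)
training = [
    ((0.82, 0.01), "pass"),
    ((0.79, 0.02), "pass"),
    ((0.55, 0.20), "fail"),
    ((0.60, 0.15), "fail"),
]
model = train_centroids(training)
print(classify(model, (0.80, 0.03)))  # pass
print(classify(model, (0.58, 0.18)))  # fail
```

Adding labeled examples moves each centroid toward its class average, a small-scale version of a model learning from more data. Predicting a continuous value, such as a defect size, would instead call for a regression model.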

In the vision market, we’re really at that initial AI and machine learning phase. AI for inspection excels at locating, identifying, and classifying objects and segmenting scenes and defects, with less sensitivity to image variability or distortion. AI algorithms are also more easily adapted to identify different types of defects or meet unique pass/fail tolerances based on requirements for different customers, without rewriting code.

Can you summarize the difference between classic computer vision and AI?

In a classic computer vision application, a developer manually tunes an algorithm for the job to be done. This can require significant customization if products A and B have different quality thresholds. Food inspection and quality grades, for example, can change for different customers or market requirements. You also have to incorporate the “human” factor in determining what’s good or bad. Algorithm inaccuracies may generate excessive false positives that stop production and force costly manual secondary inspection, or missed defects that allow poor-quality products to reach the market.
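As a rough illustration of what manual tuning means in practice, the sketch below implements a simple rule-based check: count pixels darker than a threshold and compare that fraction to a tolerance. The product names and threshold values are hypothetical; every new product or customer grade needs another hand-chosen entry, which is the customization burden described above.

```python
# Hand-tuned parameters per product (hypothetical values for illustration).
PRODUCT_RULES = {
    "product_A": {"dark_level": 60, "max_dark_fraction": 0.02},
    "product_B": {"dark_level": 80, "max_dark_fraction": 0.05},
}


def dark_fraction(image, dark_level):
    """Fraction of pixels in a grayscale image (rows of 0-255 ints) below dark_level."""
    pixels = [p for row in image for p in row]
    return sum(p < dark_level for p in pixels) / len(pixels)


def inspect(image, product):
    """Classic rule: pass if the share of dark, defect-like pixels is within tolerance."""
    rules = PRODUCT_RULES[product]
    return dark_fraction(image, rules["dark_level"]) <= rules["max_dark_fraction"]


image = [[200] * 10 for _ in range(10)]
for col in (2, 3, 4):
    image[5][col] = 50  # a small dark blemish: 3 of 100 pixels

print(inspect(image, "product_A"))  # False: 3% dark pixels exceeds the 2% limit
print(inspect(image, "product_B"))  # True: within product B's 5% limit
```

The same image passes for one product and fails for another, purely because of hand-set numbers that someone has to choose and maintain.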

Similarly, AI algorithm training has traditionally required multiple time-consuming steps and dedicated coding to input images, label defects, and optimize models. More recently, companies are developing a “no code” approach to training that allows users to upload images and data captured during traditional inspection to software that automatically generates AI algorithms, with minimal human input.

In reality, it’s not computer vision versus AI; the best approach integrates both methods. We believe in using the tried and tested algorithms from classical computer vision, overlaid with AI to improve the overall performance of the system for the end-user. We call this the “Hybrid AI” approach, and it is a more logical upgrade path than fully replacing existing systems.

How is AI being used in quality inspection?

We’ve been working with companies to add AI capabilities to print, pharmaceutical, consumer/industrial goods, and food inspection applications. Some of these are traditional defect detection applications, where AI can be used to inspect for a wider range of defect types.

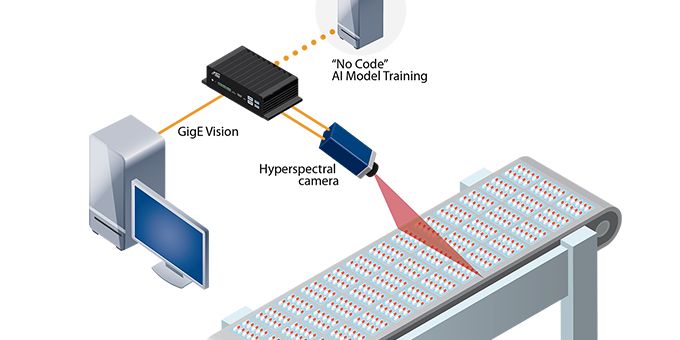

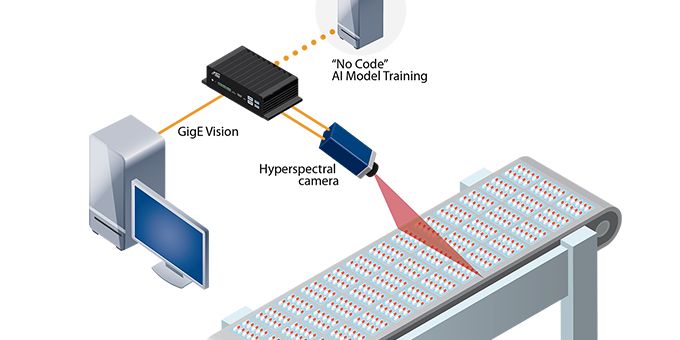

In the food and pharmaceutical markets, suppliers are looking for a cost-effective way to deploy hyperspectral imaging so they can gain greater insight into their products. For pill inspection, hyperspectral imaging can detect subtle differences in ingredients to ensure the right dosage is being delivered to users. AI can also verify that pills have the same physical appearance, such as a white coating. One of the more unusual applications is commercial linen cleaning for hotels and restaurants, where hyperspectral AI can detect stains that require additional cleaning processes.

Both applications are complex scenarios that are difficult to solve with traditional visible-light cameras. Hyperspectral cameras can analyze multiple bands that we cannot see; however, interpreting that data is complex. This is where AI gives users an easy way to train on the captured images, then deploy an algorithm into real-world applications that interprets the data without any coding.
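Each pixel in a hyperspectral image is a spectrum of many band values rather than a single color, which is part of why interpretation is hard. The sketch below uses spectral angle mapping, a classical (non-AI) technique chosen here only for illustration, to label pixel spectra by comparing them with reference spectra. It is not the method used by any product in this article; the band values and labels are hypothetical. A trained AI model would instead learn decision boundaries from labeled images rather than relying on hand-picked references.

```python
from math import acos, sqrt


def spectral_angle(a, b):
    """Angle in radians between two spectra; small angles mean similar spectral shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    return acos(max(-1.0, min(1.0, dot / norm)))


def classify_pixel(spectrum, references):
    """Assign the label of the reference spectrum with the smallest angle."""
    return min(references, key=lambda label: spectral_angle(spectrum, references[label]))


# Hypothetical reflectance values in four bands, some outside the visible range.
references = {
    "clean_linen": [0.90, 0.88, 0.85, 0.80],
    "stain": [0.60, 0.40, 0.70, 0.30],
}

print(classify_pixel([0.45, 0.44, 0.42, 0.40], references))  # clean_linen
print(classify_pixel([0.62, 0.41, 0.69, 0.33], references))  # stain
```

Because the angle compares spectral shape rather than overall brightness, the first pixel, which is roughly half as bright as the clean reference, is still labeled as clean linen.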

The other area with significant interest in AI is visual quality inspection. With a “no code” approach to training a vision system to find defects or classify objects, end-users can define what to look for without needing to know how to program. The user simply captures images, labels the data, trains a model, and deploys it in the factory, significantly speeding up the deployment of AI-based quality inspection.

Finally, upgrading existing factory systems with AI is an interesting approach. You can leverage existing systems and infrastructure and deploy AI as either a pre-processing or a post-processing step to reduce false positives and rejects, saving time and reducing material waste while maintaining high quality standards.
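A minimal sketch of the post-processing option described above: the existing rule-based check runs unchanged, and only the parts it rejects are re-scored by an AI model. The functions `classic` and `fake_model` are hypothetical stand-ins, and the 0.5 confirmation threshold is an assumed value that would need tuning against real production data.

```python
from typing import Callable, List

Image = List[List[int]]


def hybrid_inspect(
    image: Image,
    classic_check: Callable[[Image], bool],
    ai_defect_score: Callable[[Image], float],
    confirm_threshold: float = 0.5,
) -> str:
    """Keep the existing rule-based check and use an AI score to re-examine its rejects.

    Returns "pass", "reject", or "overridden" (a classic reject the AI did not confirm).
    """
    if classic_check(image):
        return "pass"  # the existing system passes the part; the AI is not consulted
    if ai_defect_score(image) >= confirm_threshold:
        return "reject"  # the AI agrees that the part is defective
    return "overridden"  # probable false positive, e.g. route to review instead of scrap


def classic(image: Image) -> bool:
    """Stand-in for an existing rule: fail if any pixel is darker than 60."""
    return all(p >= 60 for row in image for p in row)


def fake_model(image: Image) -> float:
    """Hypothetical stand-in for a trained model: score grows with the dark area."""
    dark = sum(p < 60 for row in image for p in row)
    return min(1.0, dark / 5)


clean = [[200, 200], [200, 200]]
dust = [[200, 200], [200, 50]]  # one dark speck that the rule flags
scratch = [[40, 40], [40, 200]]  # a larger dark region

for name, img in (("clean", clean), ("dust", dust), ("scratch", scratch)):
    print(name, hybrid_inspect(img, classic, fake_model))
# clean pass
# dust overridden
# scratch reject
```

Running the AI only on classic rejects leaves the existing pass path untouched, which matches the idea of upgrading a system rather than replacing it.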

What are some of the deployment challenges for AI?

We see three common challenges while working with organizations deploying AI. Most are unsure how to deploy new AI capabilities alongside existing infrastructure and processes. This is especially true in inspection systems, where there are significant investments in cameras, specialized sensors, and analysis software. Companies need to find an approach where they can deploy AI in line with their existing systems, rather than looking at costly replacements of the equipment that already exists. Related to this, there are well-established and proven processes for end-users; companies can’t afford the cost or time to retrain all their staff on a new technology or user interfaces. Finally, the cost and complexity of algorithm training is also a concern for businesses evaluating AI.

Tell us about your AI Gateway and how it is solving some of these challenges?

Pleora’s AI Gateway simplifies the deployment of advanced machine learning capabilities to improve the reliability and lower the cost of visual quality inspection. The solution works with existing inspection hardware and software, meaning an organization can add AI capabilities without replacing equipment or processes. Our DNA in vision standards and processing with GigE Vision is the foundation of the AI Gateway and allows the device to communicate with the cameras, sensors, and applications that already exist in factories. This means users can upgrade an existing system at a substantially lower cost. The AI Gateway acts as a transparent bridge that provides edge AI processing for cameras on the production floor. Existing applications simply “see” the AI Gateway as a regular machine vision camera with added AI and image processing capabilities.

“No code” algorithm training and pre-packaged plug-in AI capabilities allow organizations to more easily and cost-effectively deploy AI capabilities. We also provide a framework so organizations can deploy custom image processing and open source AI algorithms written in Python. This way companies get the benefit of a fully vendor supported and unified device platform, with the flexibility to leverage open source technologies like OpenCV and TensorFlow in Python to deploy as “plug-ins” for their projects. Companies can focus on the AI and image processing algorithm deployment and rely on the AI Gateway to take care of the rest in terms of camera interfacing, management, and deployment.

Finally, the AI Gateway bridges the gap between machine vision systems and other machinery in the factory by supporting next-generation Industry 4.0 protocols, such as OPC-UA. This means inspection results can be passed to other machines to help drive decisions and automation.

How does the AI Gateway fit into an inspection application?

Most of the companies we’re working with want AI to fit seamlessly within their existing infrastructure and processes. With “no code” training, images and data are uploaded to learning software on a PC that is optimized for the NVIDIA GPU in Pleora’s AI Gateway. AI models are transferred and deployed on the AI Gateway for production environments.

The AI Gateway handles image acquisition from any vision standard-compliant image source and sends out the processed data over GigE Vision to inspection and analysis platforms. This means end-users can avoid vendor lock-in while maintaining infrastructure, processes, and analysis software. You can plug in any GigE Vision-compliant camera from any vendor, rather than developing on a custom camera vendor platform and depending on specific SDKs or imaging libraries. It also allows developers to rely on open image processing technologies, such as OpenCV and TensorFlow, for more control over the algorithms when required.

In a quality inspection application, for example, the AI Gateway intercepts the camera image feed and applies the selected plug-in skills. The gateway then sends the AI-processed data to the inspection application, which seamlessly receives the video as if it were still connected directly to the camera. Alternatively, the AI Gateway can process imaging data with loaded plug-in skills in parallel with traditional processing tools. If a defect is detected, processed video from the AI Gateway can confirm or reject the result as an automated secondary inspection.
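The interception flow described above can be sketched as a generator that receives camera frames, runs a chain of plug-ins on each one, and forwards the result downstream. This is not Pleora's API; the plug-in signature, function names, and tiny integer frames are assumptions made for illustration, and real frames would arrive over GigE Vision rather than from a Python list.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Frame = List[List[int]]
# Hypothetical plug-in signature: frame in, (frame, results) out.
Plugin = Callable[[Frame], Tuple[Frame, Dict[str, object]]]


def bridge(frames: Iterable[Frame], plugins: List[Plugin]) -> Iterator[Tuple[Frame, Dict[str, object]]]:
    """Intercept each camera frame, apply plug-ins in order, and forward the result."""
    for frame in frames:
        shape = (len(frame), len(frame[0]))
        results: Dict[str, object] = {}
        for plugin in plugins:
            frame, plugin_results = plugin(frame)
            results.update(plugin_results)
        # Downstream software expects the same image format it got from the camera.
        if (len(frame), len(frame[0])) != shape:
            raise ValueError("plug-in changed the frame dimensions")
        yield frame, results


def invert(frame: Frame) -> Tuple[Frame, Dict[str, object]]:
    """Example image-processing plug-in: invert 8-bit grayscale values."""
    return [[255 - p for p in row] for row in frame], {}


def mean_level(frame: Frame) -> Tuple[Frame, Dict[str, object]]:
    """Example analysis plug-in: report the mean pixel value, frame unchanged."""
    pixels = [p for row in frame for p in row]
    return frame, {"mean_level": sum(pixels) / len(pixels)}


camera = [[[0, 255], [100, 155]]]  # one tiny stand-in frame
for out_frame, info in bridge(camera, [invert, mean_level]):
    print(out_frame, info)  # [[255, 0], [155, 100]] {'mean_level': 127.5}
```

The dimension check reflects the requirement that the downstream application keep receiving video it can treat as coming directly from the camera.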

The results from the inspection can also be communicated with other devices using protocols like OPC-UA to enable the next level of automation in factories.

What is an AI plug-in?

Plug-in skills for the AI Gateway are out-of-the-box solutions and sample code that allow end-users and developers to add intelligence to visual inspection applications and simplify the deployment of customized capabilities, without requiring any additional programming.

We’re partnering with AI and imaging experts to develop these plug-ins. For example, the Inspection Plug-in developed with Neurocle simplifies deep-learning based classification, segmentation, OCR and object detection capabilities. Working with perClass, we have developed a Hyperspectral Plug-in that provides an easy way to leverage advanced inspection capabilities for challenging applications.

In addition, we’re developing plug-ins for face detection and social distancing applications, as well as sample code for image comparison, QR code detection, object counting with OPC-UA, and more. These plug-ins are simple Python scripts that you can modify or add to the device; we chose Python as our plug-in language because of its simplicity for non-programmers and its popularity in the image processing and AI community. We provide full source code for all samples, which are updated and added on a continuous basis, so that developers and integrators can get started more easily on their vision projects and deploy faster by basing their projects on these samples. They simply write a Python plug-in script and upload it to the device, and the AI Gateway takes care of applying it to the video stream.
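The article does not document the plug-in interface, so the sketch below assumes a hypothetical entry point, `process(frame)`, that returns the unchanged frame plus a dictionary of results. It shows the kind of logic an object-counting sample might contain: threshold the image and count 4-connected bright regions with a breadth-first flood fill. Publishing the count over OPC-UA is omitted.

```python
from collections import deque


def count_objects(frame, threshold=128):
    """Count 4-connected groups of pixels brighter than threshold."""
    height, width = len(frame), len(frame[0])
    seen = [[False] * width for _ in range(height)]
    count = 0
    for r in range(height):
        for c in range(width):
            if frame[r][c] > threshold and not seen[r][c]:
                count += 1  # found a new, unvisited object
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:  # flood-fill the whole object so it is counted once
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < height and 0 <= nx < width
                                and frame[ny][nx] > threshold and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


def process(frame):
    """Hypothetical plug-in entry point: pass the frame through and report a count."""
    return frame, {"object_count": count_objects(frame)}


frame = [
    [0, 200, 200, 0, 0],
    [0, 200, 0, 0, 220],
    [0, 0, 0, 0, 220],
    [210, 0, 0, 0, 0],
]
print(process(frame)[1])  # {'object_count': 3}
```

With OpenCV available, `cv2.connectedComponents` performs the same labeling on a binary image; its returned label count includes the background, so subtract one to get the number of objects.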

Looking ahead a few years, where do you see AI and machine vision, and their role in quality inspection?

Even as solutions like our AI Gateway start to ease cost and complexity concerns for organizations, we’re really only at the initial stages of AI deployment. Looking ahead, one of the promises of Industry 4.0 and the Internet of Things is the notion of connected devices sharing data and using AI to make more autonomous decisions. AI capabilities will increase rapidly as more data becomes available. Interesting early conversations we’ve had with companies are exploring the concept of connected factories, where an organization can leverage the cloud, shared data, and AI skills across global sites.

This enables “Quality 4.0” applications where plants from multi-national and global companies can start sharing AI models and data and standardize on the types of defects in quality inspection. This could extend from their internal production to include external contract manufacturers to ensure consistent quality throughout the entire supply chain.

We see the eventual goal as “lights out automation”, where quality inspection systems can drive decisions through sensor networking and protocols across connected machines. This roadmap is described in our “Machine Vision 4.0” maturity model, which we developed to help companies evaluate where they are now and what the future looks like. It’s a roadmap we’re following to ensure we’re providing the next generation of technologies, hardware and software.

The content & opinions in this article are the author’s and do not necessarily represent the views of ManufacturingTomorrow
